Key Takeaways

- Agentic AI is one of the most important emerging trends in enterprise AI, from trading to robotics to autonomous operations.

- Aethir’s decentralized GPU cloud is a natural fit for AI agents that need real-time, programmable GPU inference.

- In 2026 and beyond, Aethir can enable agentic AI to autonomously book, pay for, and optimize enterprise GPU compute usage in real time.

- This vision sets the stage for a self-regulating, tokenized compute economy where AI agents manage GPU resources on behalf of enterprises.

Agentic AI, powered by AI agents capable of autonomous decision-making, negotiation, and resource acquisition, is quickly becoming one of the most transformative innovations in the AI sector. Industries such as healthcare, robotics, video production, finance, and infrastructure can all benefit as agents handle complex task sequences, collaborate with other AI systems, and manage resources without human intervention. These agents are also extremely compute-intensive, requiring constant access to premium, reliable GPU inference.

Aethir’s decentralized GPU cloud has the resources and expertise to support agentic AI booking GPU inference in real time. AI agents can secure enterprise-grade GPU inference on the network, along with smart-contract-based GPU pricing and SLAs for AI workloads. Aethir has a proven track record of supporting agentic AI, with 25+ AI agent projects receiving hands-on support from our $100m Ecosystem Fund so far.

In a world where AI agents are integrated across a broad range of industries, the idea of agentic AI booking compute on Aethir’s decentralized GPU cloud for enterprise AI workloads is quite plausible. As developers introduce more advanced agentic AI features such as DeFAI management and transactions, AI agents can also become an integral part of enterprise compute booking pipelines.

Imagine how autonomous AI agents may book compute on a decentralized GPU marketplace, powered by Aethir’s enterprise-grade GPU-as-a-Service in 2026.

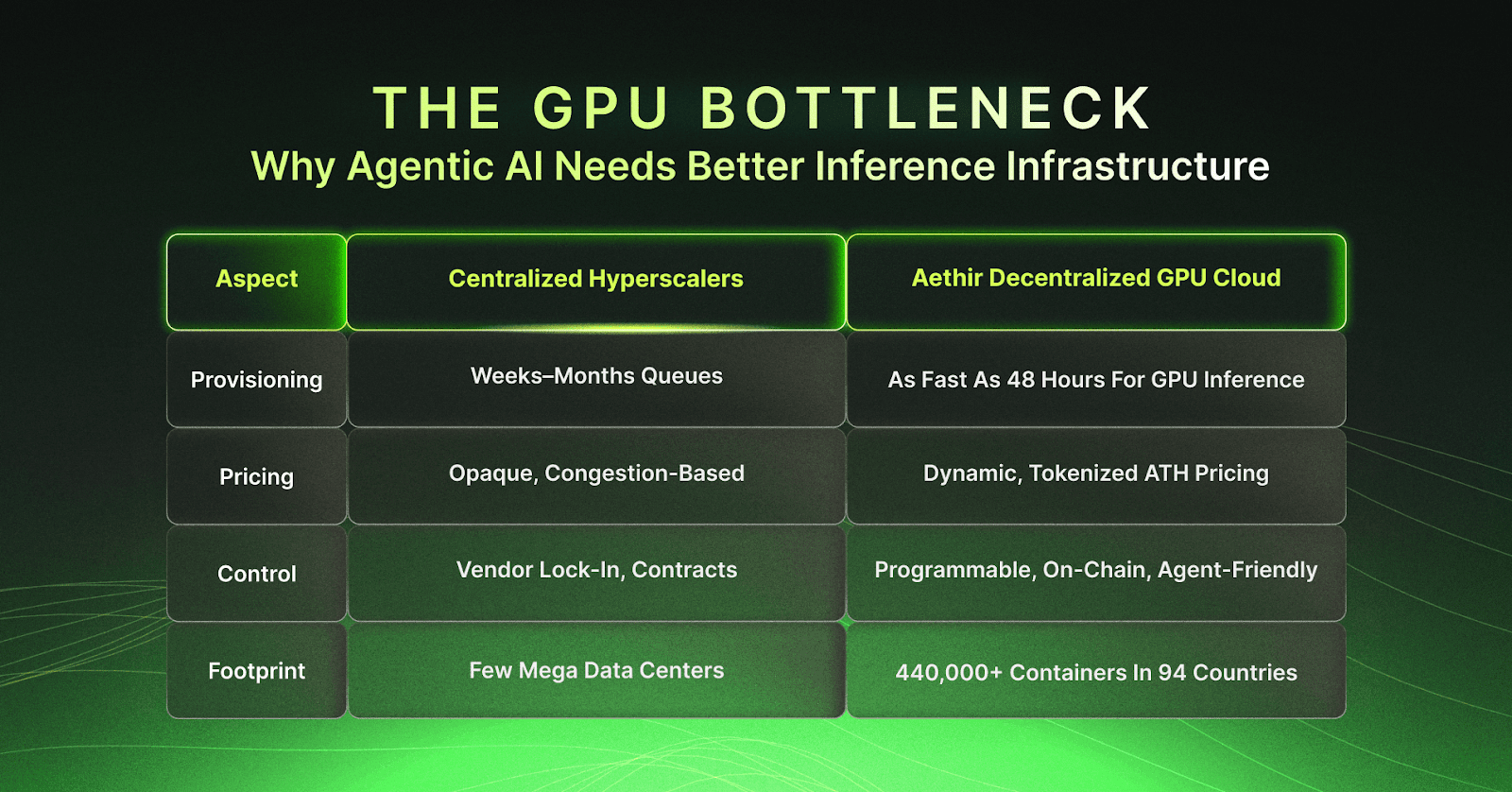

The GPU Bottleneck: Why Agentic AI Needs Better Inference Infrastructure

Centralized Cloud vs Aethir for Agentic AI Compute

Aethir’s decentralized GPU cloud is built to provide reliable, scalable, and cost-effective GPU inference through its massive global network of 440,000+ GPU Containers, distributed across 94 countries and 200+ locations.

AI enterprises need streamlined access to high-performance GPU compute, yet centralized hyperscaler clouds have numerous limitations, including high costs, vendor lock-in risks, supply chain bottlenecks, and long wait times. Centralized providers also suffer from low compute liquidity, poor GPU utilization, and congestion-based pricing.

Agentic AI can’t operate smoothly in a slow, centralized, and human-mediated procurement system. AI compute markets need to be easily accessible and practical. AI agents need programmable, liquid, decentralized GPU markets where they can transact autonomously in milliseconds. This holds for all types of AI workloads, from a single autonomous agent to a large-scale AI inference project.

Aethir’s decentralized GPU cloud model uses a tokenized compute architecture, in which ATH tokens are used to pay for and book compute from a global pool of independent Cloud Hosts. Our Cloud Host network is decentralized and has reached an annual recurring revenue (ARR) of $147m by providing compute services to a growing roster of 150+ AI, Web3, and gaming clients.

With Aethir’s decentralized approach, AI enterprises gain immediate access to GPU inference, rather than waiting weeks or months. Imagine an AI research assistant that detects rising inference demand and autonomously secures GPU compute. With Aethir, this is possible.

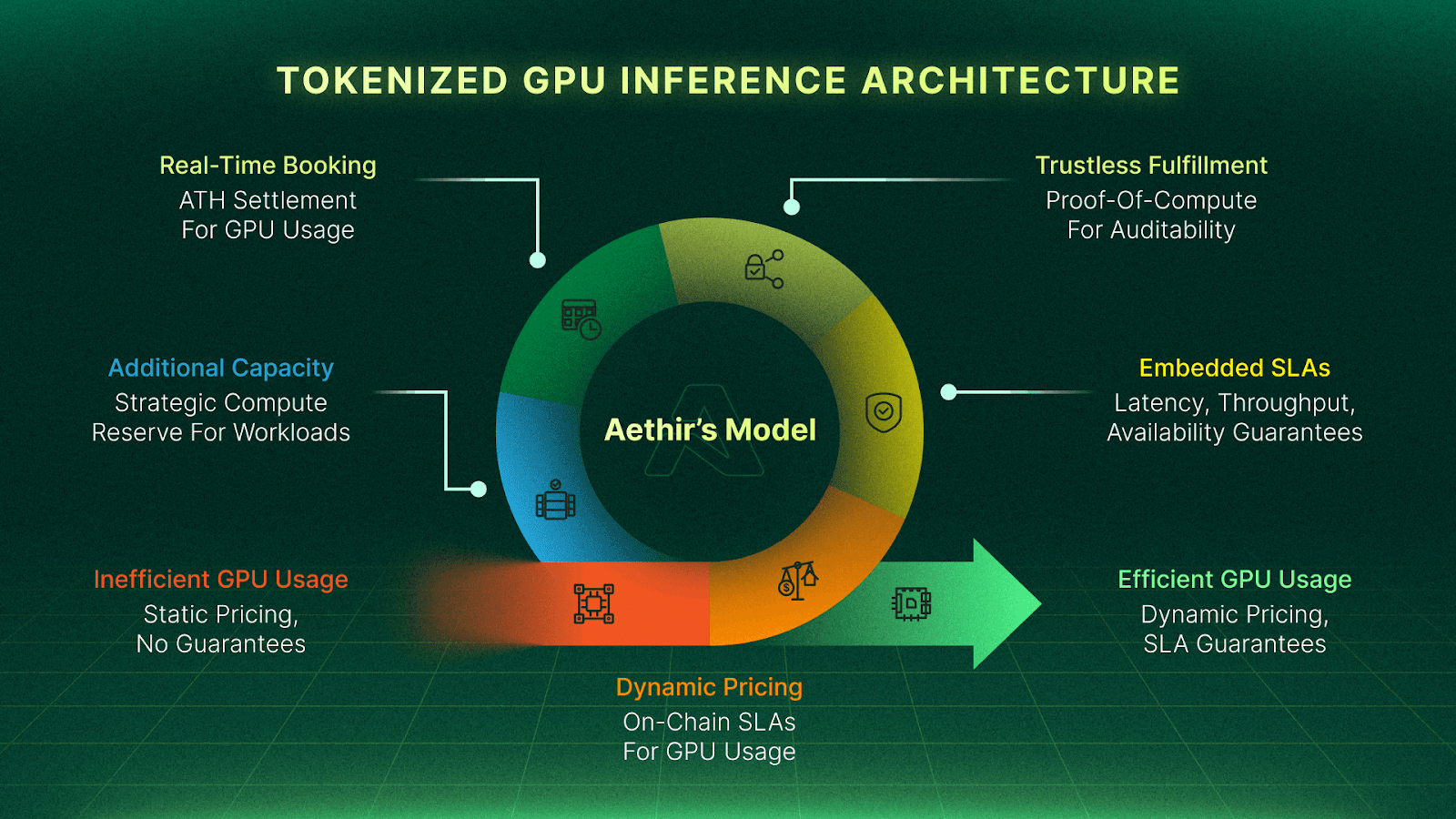

Inside Aethir’s Tokenized GPU Inference Architecture for Agentic AI

Aethir uses an entirely different approach compared to centralized hyperscaler clouds like Google Cloud, AWS, or Microsoft Azure. These big-tech providers concentrate tens of thousands of GPUs in massive data centers and service their clients from regional hubs. This means the same data centers serve both clients in their vicinity and those in different cities. Furthermore, centralized GPU inference depends on traditional purchasing channels.

With Aethir, clients can access decentralized AI compute markets and leverage ATH tokens to book compute. This model is Web3-native, but also offers versatile purchasing options for more traditional, Web2 enterprises in need of premium cloud computing services.

The computing resources in Aethir’s decentralized GPU cloud are distributed across numerous Cloud Hosts, eliminating single points of failure and supply chain bottlenecks. When our clients need more compute, we simply channel it from additional Cloud Hosts, straight to where it’s needed.

Aethir’s model enables:

- Dynamic GPU pricing

- Embedded, on-chain SLAs (latency, throughput, availability)

- Trustless fulfillment and audit via Proof-of-Compute

- Real-time GPU booking and settlement in ATH

A possible 2026 agent flow:

- AI agent detects rising inference demand.

- Agent calls Aethir’s marketplace contract with required SLA and budget.

- Routing logic selects optimal GPU containers across the network.

- Checker Nodes verify performance and correctness.

- Settlement clears in ATH, and usage metrics update in real time.
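The five steps above can be sketched as a small, self-contained simulation. This is only an illustration under stated assumptions: it does not call any real Aethir contract or SDK, and every name in it (`Sla`, `Container`, `demand_is_rising`, `route`, `settle`, the 1.5x demand threshold, and the cheapest-first routing rule) is hypothetical.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class Sla:
    """Hypothetical SLA terms an agent might pass to a marketplace."""
    max_latency_ms: float
    min_throughput_rps: float
    min_availability: float


@dataclass(frozen=True)
class Container:
    """Hypothetical GPU container offer (or measurement) with an hourly ATH price."""
    container_id: str
    latency_ms: float
    throughput_rps: float
    availability: float
    price_ath_per_hour: float


def demand_is_rising(requests_per_sec: list[float], factor: float = 1.5) -> bool:
    """Step 1: flag demand when the latest sample exceeds the prior average by `factor`."""
    if len(requests_per_sec) < 2:
        return False
    *history, latest = requests_per_sec
    return latest > factor * mean(history)


def meets_sla(c: Container, sla: Sla) -> bool:
    """Shared check for routing (advertised metrics) and verification (measured metrics)."""
    return (
        c.latency_ms <= sla.max_latency_ms
        and c.throughput_rps >= sla.min_throughput_rps
        and c.availability >= sla.min_availability
    )


def route(offers: list[Container], sla: Sla, budget_ath_per_hour: float, count: int) -> list[Container]:
    """Steps 2-3: pick the cheapest SLA-compliant containers that fit the hourly budget."""
    chosen: list[Container] = []
    spend = 0.0
    for c in sorted(offers, key=lambda c: c.price_ath_per_hour):
        if len(chosen) == count:
            break
        if meets_sla(c, sla) and spend + c.price_ath_per_hour <= budget_ath_per_hour:
            chosen.append(c)
            spend += c.price_ath_per_hour
    return chosen


def settle(booked: list[Container], measured: dict[str, Container], sla: Sla, hours: float) -> float:
    """Steps 4-5: pay (in ATH) only for containers whose measured metrics pass the SLA."""
    return sum(
        c.price_ath_per_hour * hours
        for c in booked
        if c.container_id in measured and meets_sla(measured[c.container_id], sla)
    )
```

In this sketch, the verification step stands in for the role the article assigns to Checker Nodes: a container that misses its SLA in the measured data is simply excluded from settlement.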

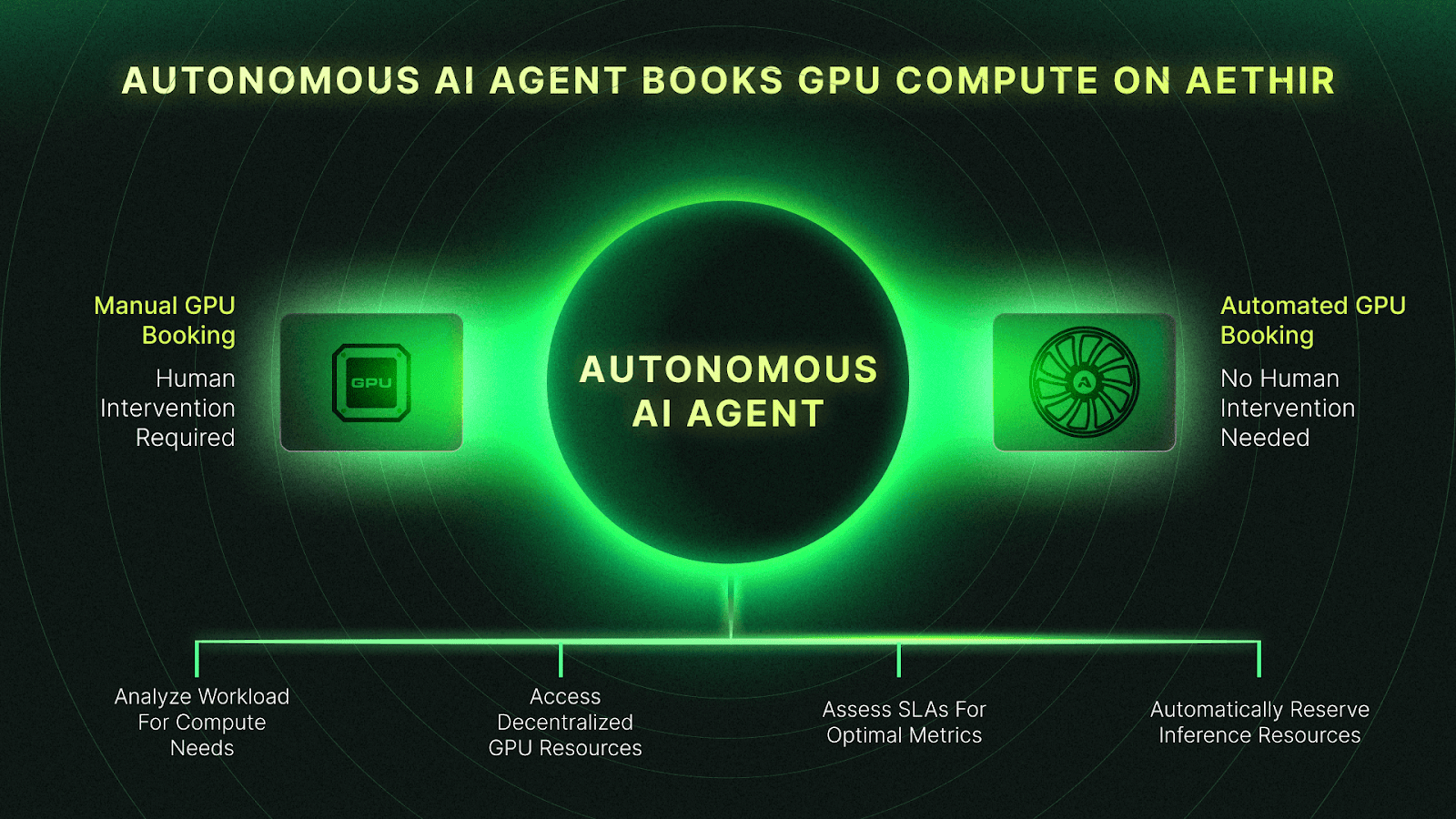

How Autonomous AI Agents Could Book GPU Compute on Aethir in 2026

Aethir’s decentralized GPU cloud has the technical capabilities to empower agentic AI for real-time GPU inference booking. The Aethir network leverages blockchain technology for booking and paying for compute with ATH tokens, making it an ideal choice for AI agent integrations.

Examples of agentic AI use cases on Aethir’s AI infrastructure in 2026:

- An AI trading agent that scales inference capacity during market volatility and automatically releases it when volume drops.

- A robotics control agent that books low-latency GPUs in specific regions to coordinate fleets in real time.

- A video-generation pipeline that schedules bursts of compute for content rendering when prices are most favorable.

A fully autonomous AI agent can be trained to handle the entire compute demand lifecycle for companies. Such AI agents need to be able to:

- Detect inference demand based on workload.

- Use Aethir’s decentralized GPU marketplace.

- Evaluate cost-performance metrics using on-chain SLAs.

- Use ATH staking mechanisms for guaranteed bandwidth.

- Automatically book GPU inference capacity without human intervention.
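Two of these capabilities, sizing demand from workload and comparing offers on cost-performance, come down to simple arithmetic. The sketch below is an assumption-laden illustration, not Aethir’s pricing logic: the function names, the 20% headroom default, and the "ATH per million requests" metric are all hypothetical.

```python
import math


def containers_needed(demand_rps: float, per_container_rps: float, headroom: float = 0.2) -> int:
    """Containers required to serve `demand_rps` with spare headroom (hypothetical sizing rule)."""
    return math.ceil(demand_rps * (1 + headroom) / per_container_rps)


def ath_per_million_requests(price_ath_per_hour: float, throughput_rps: float) -> float:
    """Cost-performance metric: ATH spent per one million inference requests."""
    requests_per_hour = throughput_rps * 3600
    return price_ath_per_hour / requests_per_hour * 1_000_000


def rank_offers(offers: dict[str, tuple[float, float]]) -> list[str]:
    """Rank offer ids from best to worst; each value is (price_ath_per_hour, throughput_rps)."""
    return sorted(offers, key=lambda name: ath_per_million_requests(*offers[name]))
```

For example, an offer priced at 1.8 ATH per hour that sustains 100 requests per second costs about 5 ATH per million requests, so it would rank ahead of a cheaper but much slower container.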

By integrating agentic AI for compute booking and monitoring, enterprises can automate their cloud computing operations and rest assured that their AI apps and projects will always have access to the processing they need. With distributed GPU infrastructure under the hood, agentic AI has the GPU inference pool required to support enterprise clients with high-performance computing at all times.

The agentic AI compute booking model uses:

- Predictive compute provisioning

- On-chain routing logic

- Automatic performance rebalancing when better pricing emerges

- Proof-of-compute verification ensuring accuracy and ROI
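As a rough illustration of the first and third items, the sketch below pairs a simple demand forecast with a rebalancing rule. The article does not specify either algorithm, so both are assumptions: the forecast is a plain exponentially weighted moving average, and the rebalancing rule switches providers only when the net saving (after a hypothetical migration cost) clears a minimum threshold.

```python
def forecast_demand(samples: list[float], alpha: float = 0.5) -> float:
    """Predictive provisioning: exponentially weighted moving average of past demand.

    Assumes `samples` is non-empty; a higher `alpha` weights recent samples more.
    """
    level = samples[0]
    for x in samples[1:]:
        level = alpha * x + (1 - alpha) * level
    return level


def should_rebalance(
    current_price: float,
    new_price: float,
    remaining_hours: float,
    migration_cost_ath: float,
    min_saving_ratio: float = 0.05,
) -> bool:
    """Rebalance only if the net saving exceeds a share of the remaining spend.

    All prices are in ATH per hour; `migration_cost_ath` and `min_saving_ratio`
    are hypothetical parameters for this sketch.
    """
    net_saving = (current_price - new_price) * remaining_hours - migration_cost_ath
    return net_saving > min_saving_ratio * current_price * remaining_hours
```

The threshold keeps an agent from migrating workloads over marginal price differences, where the switching cost would cancel out the gain.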

Furthermore, AI agents can rebalance assets, stake compute, and resell surplus, creating a self-adjusting compute economy that aligns perfectly with Aethir’s decentralized GPU cloud computing model.

Toward a Self-Regulating Agentic AI Compute Economy on DePIN

Agentic AI and decentralized compute fit like two puzzle pieces. While Aethir’s decentralized GPU cloud provides high-performance computing for AI enterprises, AI agents can manage, book, and coordinate compute consumption for clients. It’s like an automated mechanism for optimizing and paying for GPU compute without human involvement.

In 2026, this vision may become a reality given the tremendous advancements in agentic AI and machine learning. Leveraging Aethir’s AI compute markets is a logical next step for tokenized compute, with agentic AI handling demand forecasting and routing.

Instead of dealing with slow and expensive centralized cloud systems, agentic AI can leverage Aethir’s decentralized GPU cloud and reap major benefits for clients, including:

- Reduced cost

- Better performance

- Elimination of provisioning delays

Aethir’s decentralized GPU cloud ensures enterprise-grade reliability, while tokenized compute enables transparent, algorithmic pricing. This model reshapes AI compute markets from static infrastructure into autonomous economic systems, with agentic AI at the forefront of everyday compute booking.

If you are building agentic AI systems, autonomous trading agents, or AI-native operations tools, Aethir’s decentralized GPU cloud provides the programmable compute layer you need.

Discover Aethir’s decentralized GPU cloud offering for enterprises here.

Explore our official blog to learn more about Aethir’s AI, Web3, and gaming infrastructure.

FAQs

Why is agentic AI a strong fit for Aethir’s decentralized GPU cloud?

Agentic AI requires fast, programmable, and reliable GPU inference, which Aethir provides via a globally distributed, tokenized GPU marketplace with embedded SLAs and significantly lower costs than traditional cloud providers.

How could agentic AI book GPU compute on Aethir in 2026?

AI agents can interact with smart contracts, pass workload and SLA parameters, and use ATH tokens to reserve and verify GPU inference capacity across 440,000+ containers in seconds.

What advantages does Aethir offer over centralized cloud providers for agentic AI?

Immediate access, global distribution, dynamic pricing, no vendor lock-in, higher utilization, and programmable, on-chain interfaces designed for machine-to-machine transactions.
